Fast logging

Everyone loves logs, but everyone hates logging. Logging is such a performance hindrance to get right I share here some napkin drawings of how to do it relatively fast.

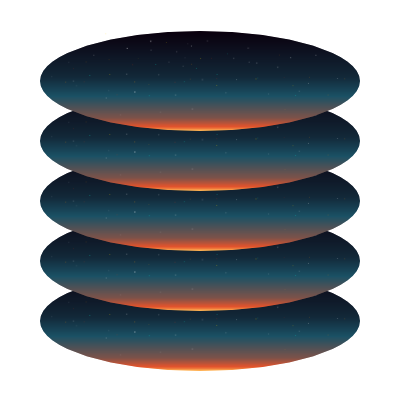

So here is a peel-out of that diagram:

Disruptor

A disruptor is a design pattern where a ring buffer is bounded by 4 limits:

- a reader trailer

- a reader lead

- a writer trailer

- a writer lead

The reader trailer is the bound for the writer lead to progress, the space between the reader trailer and reader lead are being read right now.

The writer trailer is the bound for the reader lead to progress, the space between writer trailer and writer lead are being written right now.

It basically allows several readers to read at once and several writers to write at once while avoiding accidental overlap of the reader and writer side. Threads would only wait for threads that started the same operation as them before they did, so you only wait for operations that are already deep in progress.

This design pattern was made in Java first by LMAX.

Disruptors are blazingly fast queues, here we use one as a large IO buffer, the single reader means we only need a single atomic for the reader side.

Register, source and sink

The register is a factory/singleton that registers one sink per tag. The goal is to have one type of tag for each type of log. You can grab a source for a sink provided you know the tag for it.

This mean you can have several logs configured differently for different things. Each sink has its own thread. Reading the disruptor should be strategized. It should forcefully try to read even when exhausted for a few cycles before going into yielding or waiting.

The source is the writer side of the logger, the sink is the collector that outputs to a file or terminal.

In an ideal case, the source pads the data with spaces to align it with the hardware destructive interference size before issuing a new line at the end of that padding. This will prevent misswriting in the RAM by making the target pointer aligned to cache-line sizes.

Keep in mind that newlines may not be 1 byte. They are 2 bytes on windows.

Sink strategies

Direct IO

Direct IO is used to write to disk immediately, not going through the system cache. This is perfect for logs that must survive an operating system crash.

This is an uncommon usecase, but you should be prepared to having this.

Embracing the page buffer

You can use the system page buffer to your advantage, use whatever goes into the system first and dump as much into it as possible.

This means whatever is written there will go to disk if your process crashes and hence survive crashes once written. You will however lose what is in the buffer, so my advice is to allocate that buffer in a memory mapped file and to be prepared to read garbled logs from said file.

IO uring

For logs where you are ready to lose any data, IO uring will keep the IO data to be written in userspace and dump it as fast as possible in the drive without getting interrupted by the OS. But that means some things may be lost, anything in the ring will be lost on crash and may not be in your file mapped buffer either. Use for unimportant stuff.

IO uring is Linux specific.

External sink

With this strategy, the sink is not another thread, but another process entirely. The sink is hence a noop.

The actual sink is a process that mounts the same shared memory area as the writers and uses it for reading the input.

Source strategies

There is not a lot to say about the strategies for the sources. The most important bit is the following: we want 2 ways to deal with an overflow of logs: a waiting strategy that waits for the sink to process logs, and a non-waiting strategy that will just cut the log in place with a newline at the start and keep writing over the data[1].

Both these strategies have their own issues and benefits: overwriting is very fast, but we may lose data (and hence be unable to measure how fast we are because no acurate logs); waiting is slower[2], but every log message in full and accurate.

Conclusion

If you want, I may develop this as a C and C++ library. Give me a nudge by saying if you want it here https://cloud.nekoit.xyz/apps/forms/WCFt9q4SrRQ3yyDp.

The next post is going to be a bit more technical: how to format logs fast enough that it matters (thanks to Vincente from Metacall for these prompts on logging). Dragonbox, stringification algorithms and all will be on the menu!

Overwriting is especially powerful if the sink is another process because it allows you to process the logs only if you need it by attaching the listener to the memory mapped file. That means ignoring logs most of the time and either processing logs after crash (on the now no longer written to file) or when debugging. ↩︎

Slower doesn't mean actually that slow. The waiting should be parametrized to be either a spinlock or a yield or spinning then yielding. That is a strategy for a strategy which is why strategy is the best of all design patterns ever along with type-erasure (opinions may vary). ↩︎

If you like my content and want more, please donate. If everyone that finds my content useful paid $1 every week, I would be able to produce content for 1 week a month without relying on other sources of income for that week.